With the "Deus Ex Machina? — Testing AI Tools" series, we present various tools that aim to simplify writing, design and research using artificial intelligence. More on the "Deus Ex Machina?" series can be found here.

Overview

It sounds like the dream of many students and scientists: simply type in a question and, within seconds, learn how scientific research would answer it, without having to work through long, highly complex papers. This dream seems to have come true, at least if you believe the developers of the AI tool Consensus. Consensus claims to be exactly that: an AI-based search engine that makes the world's academic knowledge more accessible to its users.

The principle behind the tool is simple: you type in a question, just as you would in any other search engine, and receive answers in natural language. However, these answers are not generated from arbitrary sources, such as the most-clicked websites on a topic, but only from the best findings that science currently has to offer. Consensus claims to cover over 80 scientific fields.

The results are based on the Semantic Scholar database, which contains over 200 million scientific papers, both studies and theoretical texts. According to Consensus, the database is constantly being expanded. Building on this foundation and on its own LLMs and search technologies, Consensus claims that, unlike ChatGPT for example, it does not give its users the most likely answer but the most scientifically sound one.

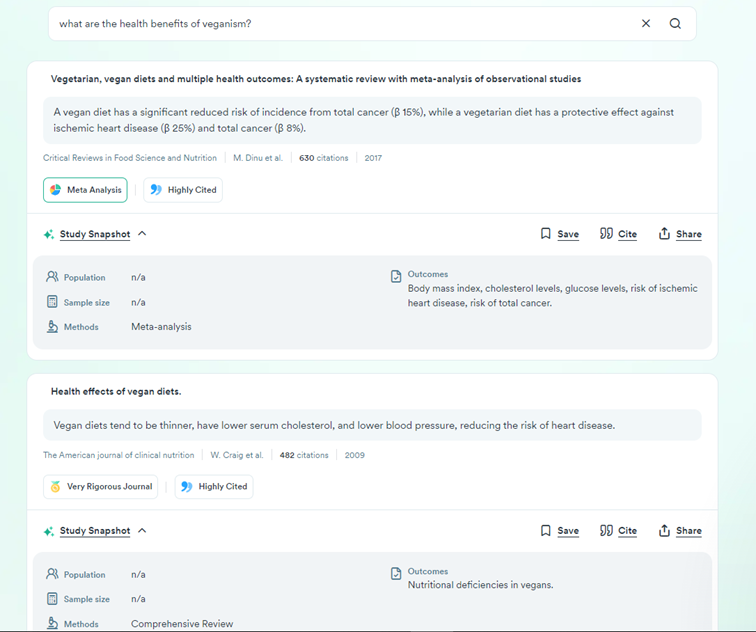

The answers are presented in natural language and are based on specific papers, which can be viewed directly and from which quotes are displayed. Each question is answered using the 20 most relevant papers.

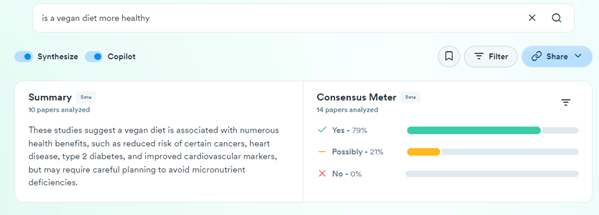

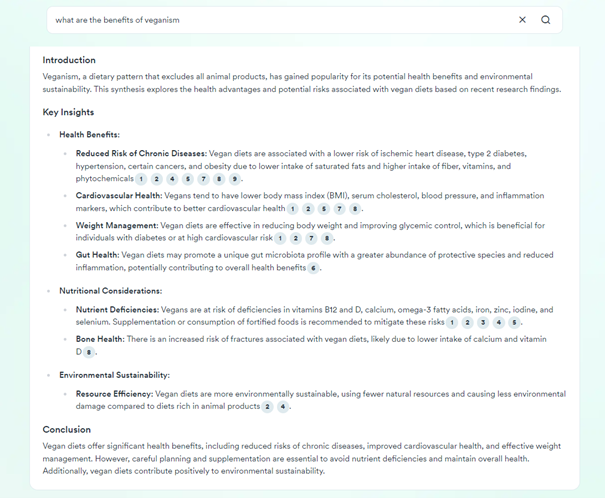

So much for the basic principle of the tool. In addition, numerous add-ons simplify and refine the search. Consensus, for example, offers a summary of the ten best papers that answer the question posed. For questions that allow a clear yes/no answer, the so-called Consensus Meter shows at a glance whether there is a scientific consensus on a topic or whether it is contested. The Consensus Copilot breaks the question down into its core elements or topics and answers each of them, with references, on the basis of the papers used.
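Consensus does not document how the Consensus Meter computes its figures. As a rough illustration only, the following Python sketch assumes that each retrieved paper has already been labeled with a "yes", "possibly" or "no" answer to the question. It then reports the share of each label. The function name `consensus_meter` and the three labels are hypothetical.

```python
from collections import Counter

# Hypothetical answer labels; Consensus's real classification scheme may differ.
LABELS = ("yes", "possibly", "no")


def consensus_meter(verdicts: list[str]) -> dict[str, float]:
    """Return the percentage of papers per label, rounded to one decimal place."""
    unexpected = set(verdicts) - set(LABELS)
    if unexpected:
        raise ValueError(f"unexpected labels: {sorted(unexpected)}")
    if not verdicts:
        # No papers means no evidence either way.
        return {label: 0.0 for label in LABELS}
    counts = Counter(verdicts)
    total = len(verdicts)
    return {label: round(100 * counts[label] / total, 1) for label in LABELS}


if __name__ == "__main__":
    # Ten made-up verdicts, purely for illustration.
    verdicts = ["yes"] * 7 + ["possibly"] * 2 + ["no"]
    print(consensus_meter(verdicts))  # {'yes': 70.0, 'possibly': 20.0, 'no': 10.0}
```

Labels outside the three expected values raise an error instead of being silently ignored. Without that check, the percentages would no longer describe all retrieved papers.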

Consensus also offers a so-called Study Snapshot. It shows central elements of the study design at a glance, such as the methods, sample size and population studied, which allows quick conclusions about the significance of a study. In our test, however, the Study Snapshot provided this information for only about half of the results. For the rest, it was missing.

You can also refine your search with filters so that the program outputs only selected papers. For example, you can filter by sample size, methodology, study design, open-access availability and more. Consensus also labels results with its own quality indicators, which make it easier to focus on the best papers. These take into account, for instance, the number of citations, the quality of the journal in which the paper was published and the study type.
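Filtering can be thought of as a series of conditions that a paper must pass. The Python sketch below is a hypothetical illustration and not Consensus's actual code. The `Paper` fields and the `filter_papers` parameters are invented for this example, and the function applies only the filters that are explicitly set.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Paper:
    # Hypothetical record; field names are illustrative, not Consensus's schema.
    title: str
    study_design: str           # e.g. "RCT", "meta-analysis", "observational"
    sample_size: Optional[int]  # None if the paper does not report it
    open_access: bool


def filter_papers(
    papers: Iterable[Paper],
    *,
    min_sample_size: Optional[int] = None,
    designs: Optional[set[str]] = None,
    open_access_only: bool = False,
) -> list[Paper]:
    """Keep only the papers that pass every filter that was actually set."""
    kept = []
    for paper in papers:
        if min_sample_size is not None and (
            paper.sample_size is None or paper.sample_size < min_sample_size
        ):
            continue  # too small, or sample size unknown
        if designs is not None and paper.study_design not in designs:
            continue
        if open_access_only and not paper.open_access:
            continue
        kept.append(paper)
    return kept


if __name__ == "__main__":
    papers = [
        Paper("A", "RCT", 1200, True),
        Paper("B", "observational", 80, True),
        Paper("C", "meta-analysis", None, False),
    ]
    print([p.title for p in filter_papers(papers, min_sample_size=100)])  # ['A']
```

One design choice is worth noting. When a minimum sample size is set, the sketch drops papers that do not report a sample size. That gap is similar to the missing Study Snapshot data we observed.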

These functions already make clear that Consensus focuses primarily on the natural sciences and empirical research and is tailored to this scientific system accordingly. The company states that it also covers subjects outside the natural sciences, and in our test we did receive answers to questions from the humanities. However, the less empirically a discipline works, the more clearly the tool reaches its limits. Consensus is trained to provide precisely formulated answers, which humanities research can rarely give. For smaller research areas, such as rhetoric, the results are even less meaningful, and this is where the gaps in the database become apparent. The same applies to subjects that do not primarily publish in English, such as the national philologies.

Consensus can be used free of charge after registering with a Google account or an email address. However, the in-depth search options are very limited, and users can ask only a small number of questions that are answered with the Consensus Meter, Summary and Copilot. Permanent access to the full feature set requires a subscription.

The company was founded in 2021 by Christian Salem and Eric Olson, both alumni of Northwestern University in Illinois. According to the founders, this is also where the idea for Consensus was born: using AI to make science more accessible to everyone. Consensus finally launched at the end of 2022, shortly before the release of ChatGPT.

The AI behind the application

Infobox: RAG

Retrieval Augmented Generation (RAG) is a technique that makes the output of large language models (LLMs) more reliable and more specific and reduces undesirable side effects such as hallucinations. Normally, an LLM answers prompts using the knowledge contained in the data set it was trained on. This knowledge is implicit and is usually sufficient for general questions. If a prompt requires specific knowledge, however, an LLM may answer vaguely or invent information, an effect widely known among researchers as “hallucination”. RAG gives the LLM access to additional knowledge sources, so that it no longer depends solely on the implicit knowledge from its training data. In this way, RAG feeds specific knowledge into an LLM and makes it more reliable.
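RAG has two steps: the system first retrieves relevant text and then passes that text to the model along with the prompt. The simplified Python sketch below illustrates both steps. For retrieval, it counts the words a question shares with each document. This stands in for the embedding-based vector search that real systems typically use. The "augmentation" places the retrieved passages into the prompt. The sketch leaves out the actual call to a language model. `retrieve` and `build_prompt` are hypothetical names, and the documents are placeholders rather than real abstracts.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return up to k documents sharing the most words with the question.

    Word overlap is a simplified stand-in for embedding-based vector search.
    """
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(doc)), i, doc) for i, doc in enumerate(documents)]
    scored.sort(key=lambda item: (-item[0], item[1]))  # best overlap first, then input order
    return [doc for score, _, doc in scored[:k] if score > 0]


def build_prompt(question: str, passages: list[str]) -> str:
    """Augmentation step: put the retrieved passages into the prompt as numbered sources."""
    sources = "\n".join(f"[{n}] {p}" for n, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # Placeholder documents, not real abstracts.
    docs = [
        "Abstract of a trial on sleep duration and memory",
        "Abstract of a survey on coffee consumption",
        "Abstract of a cohort study on sleep and heart health",
    ]
    question = "Does sleep affect memory?"
    prompt = build_prompt(question, retrieve(question, docs))
    print(prompt)  # this prompt would then be sent to an LLM (generation step, not shown)
```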

But how exactly does the tool work? Consensus combines various AI applications. The developers themselves describe Consensus as working like an assembly line: more than 25 different LLMs work together at various stages of the process to produce the final results. Consensus also runs additional vector and keyword searches, which generate specific metadata.
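Consensus does not publish how it merges the results of its vector and keyword searches. One widely used generic technique for combining two ranked result lists is reciprocal rank fusion (RRF). In RRF, each document receives 1/(k + rank) from every list it appears in. The Python sketch below implements RRF as an illustration only. The constant k = 60 is a common default in the literature, not a Consensus parameter, and the paper IDs are placeholders.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document gains 1 / (k + rank) for every list it appears in (rank starts at 1),
    so items ranked highly by several searches rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    vector_hits = ["p3", "p1", "p2"]   # hypothetical semantic-search ranking
    keyword_hits = ["p1", "p4", "p3"]  # hypothetical keyword-search ranking
    print(reciprocal_rank_fusion([vector_hits, keyword_hits]))  # ['p1', 'p3', 'p4', 'p2']
```

Here `p1` comes first because it ranks highly in both lists, even though the vector search alone put `p3` on top.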

In addition to classic Retrieval Augmented Generation (RAG), which makes Consensus's results more reliable and valid, the company is also pursuing a newer approach. Before specific data is retrieved, Consensus first generates additional metadata that could be useful later in the process. As the company itself puts it, this is to some extent a reversal of RAG, namely generation augmented retrieval.
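In generation augmented retrieval, the system first derives metadata from the question and then uses that metadata to retrieve sources. The hypothetical Python sketch below illustrates this order of steps. `generate_metadata` is a rule-based stand-in for what would be an LLM call in a real system. The metadata fields (`keywords`, `yes_no_question`) are assumptions chosen for illustration, not Consensus's actual schema.

```python
import re

# Hypothetical helper data; a real system would ask an LLM to produce the metadata.
STOPWORDS = {"does", "do", "is", "are", "can", "how", "what", "the", "a", "an", "of", "to", "in", "on", "and"}
YES_NO_STARTERS = {"does", "do", "is", "are", "can"}


def words(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def generate_metadata(question: str) -> dict:
    """Step 1 (generation): derive metadata from the question before retrieving anything."""
    tokens = words(question)
    return {
        "keywords": [t for t in tokens if t not in STOPWORDS],
        "yes_no_question": bool(tokens) and tokens[0] in YES_NO_STARTERS,
    }


def retrieve_with_metadata(metadata: dict, abstracts: list[str], k: int = 3) -> list[str]:
    """Step 2 (retrieval): rank abstracts by how many generated keywords they contain."""
    keywords = set(metadata["keywords"])
    scored = [(len(keywords & set(words(a))), a) for a in abstracts]
    # sorted() is stable, so abstracts with equal scores keep their input order.
    ranked = sorted((item for item in scored if item[0] > 0), key=lambda item: -item[0])
    return [abstract for _, abstract in ranked[:k]]


if __name__ == "__main__":
    meta = generate_metadata("Does caffeine improve memory?")
    print(meta)  # {'keywords': ['caffeine', 'improve', 'memory'], 'yes_no_question': True}
    abstracts = [  # placeholder texts, not real abstracts
        "Trial of caffeine and memory in adults",
        "Survey of sleep habits",
        "Caffeine and alertness in drivers",
    ]
    print(retrieve_with_metadata(meta, abstracts))
```

A flag such as `yes_no_question` could, for instance, determine whether a yes/no summary like the Consensus Meter makes sense for a given query. This is an assumption for illustration only.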

This complex interplay of technologies ranks the available sources from the database and ultimately uses only those considered the best according to specific criteria (study design, publication date, journal, number of citations, etc.). For individual functions, such as summarizing, Consensus uses OpenAI's GPT-4.
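A weighted score is one way to combine such criteria into a single ranking. Everything in the Python sketch below is invented for illustration: the weights, the design scores and the normalization choices. Consensus does not disclose its ranking formula. The default `n=20` merely echoes the 20 papers per question mentioned earlier.

```python
import math
from dataclasses import dataclass

# Hypothetical scores and weights; Consensus does not disclose its ranking formula.
DESIGN_SCORES = {"meta-analysis": 1.0, "systematic review": 0.9, "rct": 0.8, "observational": 0.5}
WEIGHTS = {"design": 0.4, "citations": 0.3, "journal": 0.2, "recency": 0.1}


@dataclass(frozen=True)
class Candidate:
    title: str
    study_design: str
    citations: int
    journal_score: float  # assumed to be pre-normalized to the range 0..1
    year: int


def quality_score(paper: Candidate, current_year: int) -> float:
    """Weighted sum of criteria, each mapped to the range 0..1."""
    design = DESIGN_SCORES.get(paper.study_design.lower(), 0.3)  # unknown designs score low
    citations = min(math.log10(1 + paper.citations) / 3, 1.0)    # saturates at 999 citations
    age = current_year - paper.year
    recency = min(1.0, max(0.0, 1 - age / 20))                   # linear decay over 20 years
    return (
        WEIGHTS["design"] * design
        + WEIGHTS["citations"] * citations
        + WEIGHTS["journal"] * paper.journal_score
        + WEIGHTS["recency"] * recency
    )


def top_papers(candidates: list[Candidate], current_year: int, n: int = 20) -> list[Candidate]:
    """Return the n highest-scoring candidates, best first."""
    return sorted(candidates, key=lambda p: quality_score(p, current_year), reverse=True)[:n]


if __name__ == "__main__":
    pool = [  # made-up candidates for illustration
        Candidate("B", "observational", 0, 0.5, 2004),
        Candidate("A", "meta-analysis", 999, 1.0, 2024),
        Candidate("C", "RCT", 99, 0.5, 2014),
    ]
    for paper in top_papers(pool, current_year=2024):
        print(paper.title, round(quality_score(paper, 2024), 2))
```

The logarithm keeps a handful of extremely highly cited papers from dominating the ranking. Changing any of the weights can reorder the results noticeably, which is one reason published ranking criteria are worth scrutinizing.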

The rhetorical potential of the tool

Consensus is particularly useful for the aspect of rhetoric known as logos: persuasion through sound, substantive arguments. Arguments are only credible, and therefore effective, if they can be substantiated. Scientific findings and studies provide sound reasons from which convincing arguments can be built. Consensus facilitates access to these findings and summarizes the scientific consensus precisely. Taking the Consensus Meter result from the example above, an argument could look like this: “A vegan diet is healthier for humans than an omnivorous diet, as confirmed by more than 70% of current studies on the topic.”

This is much more convincing than a simple “According to current studies, a vegan diet is healthier for humans than an omnivorous diet.” Figures and data create an effect of evidence (in rhetorical terms, evidentia) and convince the addressee on a different level, because they point to concrete facts.

Of course, you don't need Consensus to build such arguments and incorporate scientific results into them, but the tool saves a good deal of research time. Finding arguments, known as inventio, is much faster with Consensus. The tool also offers some advantages in elocutio, the verbal formulation of the material, since Consensus already partly does this by outputting its results in natural language. Here, however, the tool lacks the addressee orientation that is essential in rhetoric, as well as a sense of appropriateness (aptum): Consensus always outputs its answers in the same style. Yet communication must be adapted to whoever it is meant to reach. The same content must be formulated very differently depending on whether it is aimed at children, academics, a specialist audience, skeptics, newspaper readers or social media users. Consensus cannot offer this customization, so the final wording is up to the users themselves.

Usage in Science Communication

Consensus offers a wide range of applications for science communication. It gives users a good overview of specific questions in particular research fields, points them to further sources and core theses, and presents the prevailing scientific opinion. This enables people from outside the field, such as journalists, to gain insight into a research field quickly and with little research effort. Science communicators, in turn, can produce high-quality content grounded in scientific findings. Consensus presents these findings clearly, which makes it easier for science communicators to understand and pass on the content.

The tool's high standards and its focus on the best and most rigorous results, according to specific criteria, also help prevent poor-quality research or even misinformation from finding its way into science communication and being disseminated.

However, the tool's search settings also reproduce common biases in the scientific system and reinforce existing power structures. Marginalized groups and minorities have less chance of being heard if, for example, their papers are excluded from Consensus's results because they have been cited too rarely. The fact that Consensus operates primarily in English and with English-language sources also plays a major role here, although this reflects a general phenomenon in science, which is predominantly conducted in English.

Besides the focus on the English-speaking world, it is also clear, as mentioned above, that Consensus does not cover all disciplines equally but mainly the natural and engineering sciences. For questions in the humanities, for example, the results are thinner and therefore less reliable. Moreover, the database includes only digitized knowledge and therefore has numerous blind spots.

This makes Consensus suitable for science communication, but with restrictions and not equally for all fields of research.

Wrap Up

Consensus is a remarkable tool that answers its users' questions in a scientifically sound, clear and understandable way within a very short time. Its numerous functions provide a good overview of the scientific consensus and the core content of individual research fields, making it a potential everyday aid for experts and non-specialists alike. However, the tool also has its limitations: it maps some research areas better than others, reinforces prevailing biases and power structures, and is not very flexible. It remains to be seen how far the developers will continue to expand Consensus and whether this will lay the foundation for new forms of scientific work with AI.