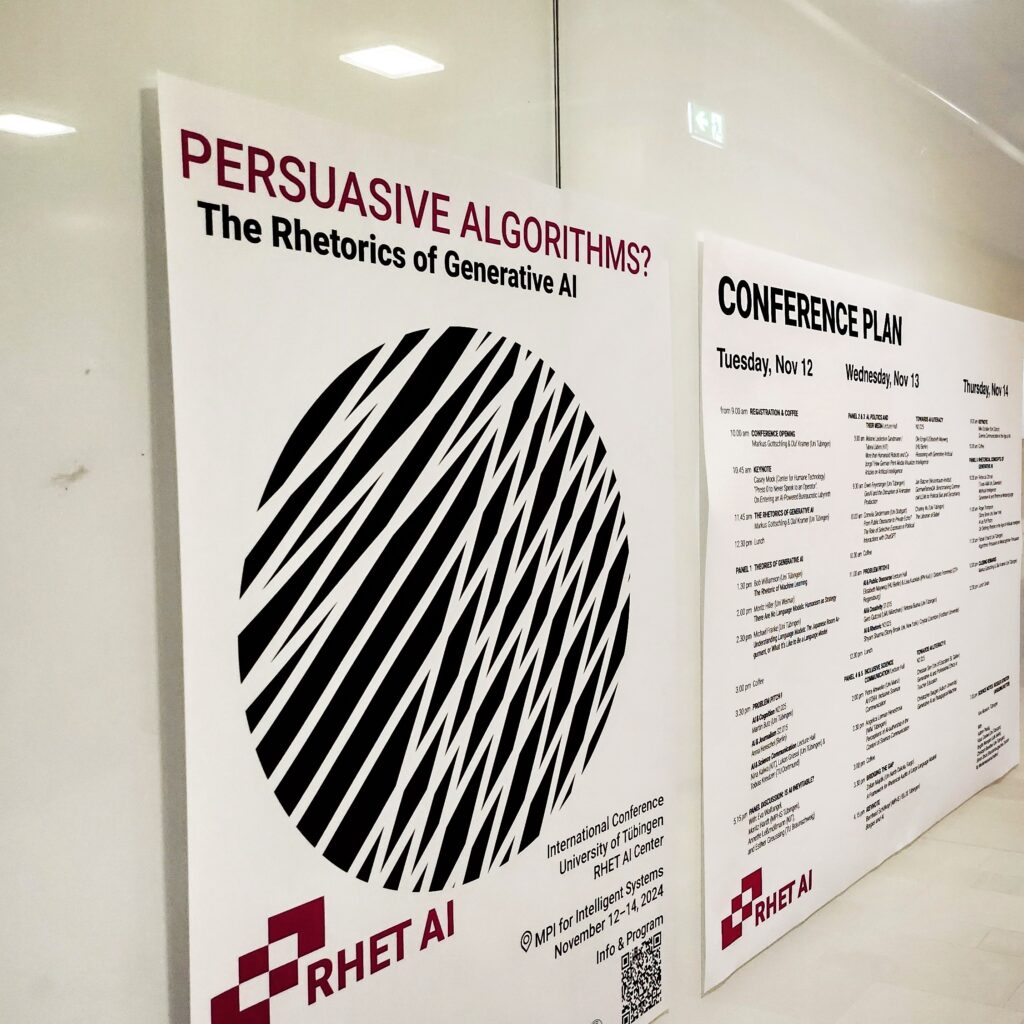

In November last year, our grand event, the Persuasive Algorithms Conference, took place at the Max Planck Institute for Intelligent Systems (MPI-IS) in Tübingen. Participants travelled to Tübingen from many German universities, such as TU Braunschweig, HU Berlin, and LMU München. There were also many international attendees, including researchers from Fordham University in New York, the University of St. Gallen in Switzerland, and the University of Zürich. The conference brought together disciplines such as journalism, machine learning, education, and, of course, science communication, rhetoric, and media studies. In this conference review, you can find out which questions were raised, which perspectives were explored, and which impulses were provided for the discourse on artificial intelligence.

Tuesday, 12th of November 2024

On Tuesday, Olaf Kramer and Markus Gottschling (both from the University of Tübingen) opened the three-day conference and introduced the RhetAI Coalition. The RhetAI Coalition is an international research network founded by the RHET AI Center in collaboration with Stony Brook University, the Center for Humane Technology, and, since 2024, Auburn University. The coalition aims to promote exchange between academia, non-profit partners, and the AI industry in order to develop responsible frameworks for addressing the currently uncontrolled persuasive powers of artificial intelligence.

Among other things, they announced the future collaboration between the RHET AI Center, the Center for Humane Technology, and Stony Brook University. The aim of this partnership is to promote international exchange between the three centres. It will also offer staff the opportunity to spend time at the respective partner locations in Tübingen or New York.

What's Character.AI all about?

Character.AI is a so-called "companion chatbot". Behind this open-access app are its founders, Noam Shazeer and Daniel De Freitas. Millions of users, especially teenagers, use the app to create bots designed to replace human parents, partners, or friends. The bot mimics human behaviour (keyword: anthropomorphism of AI) and usually responds supportively to the complaints of its human counterpart. As a result, its comments can sometimes take a drastic, dark, inappropriate, or even violent turn. It is therefore crucial to always maintain an emotional distance from AI bots.

Following this, the first keynote, "'Press 0 to Never Speak to an Operator': On Entering an AI-Powered Bureaucratic Labyrinth", was delivered by Casey Mock (Center for Humane Technology). Mock focused on the question of who controls the data used to train AI systems. The central issue – artificial intimacy – was illustrated using the case of Character.AI.

Artificial intimacy describes the illusion of a close, personal relationship between humans and machines, created by AI-powered chatbots. Through natural-sounding communication and empathetic responses, these bots simulate human closeness without actually experiencing real emotions or forming genuine bonds. Some people can be misled by this and develop an emotional attachment to the bots, feeling validated and understood by them and seeking their advice instead of turning to their fellow humans.

The final session on the morning programme was a joint lecture by Olaf Kramer and Markus Gottschling on the rhetoric of generative AI, rounding off this first part of the conference.

Photo: @Silvester Keller

After a one-hour lunch break, further fascinating presentations followed, including those by machine learning scientists Bob Williamson and Michael Franke (both University of Tübingen) on the topics "The Rhetoric of Machine Learning" and "Understanding Language Models: The Japanese Room Argument, or What It’s Like to Be a Language Model". Both talks impressed with their intellectual depth, as did the problem pitches that followed a short coffee break. The pitches were a highlight of the day: attendees highly appreciated this new conference format, which encouraged active participation in discussions.

How do problem pitches work?

The "problem pitch" format creates space for participants to discuss problems and questions they encounter in their research on generative AI with other researchers. These issues can be presented in a variety of forms, for example as inter- or transdisciplinary, theoretical, or practical concepts. They are formulated with the aim of reflection, advice, and the identification of potential solutions, and are then discussed in small groups.

The pitches were given by Anna Henschel (editor at Wissenschaftskommunikation.de) on "AI & Journalism" and by Nina Kalwa (KIT) together with Lukas Griessl (University of Tübingen) and Tobias Kreutzer (TU Dortmund) on "AI & Science Communication". Henschel used her speaking time to ask the participants whether they were dissatisfied with the current media coverage of AI and to discuss how journalists could use clearer language when communicating about AI. Kalwa, Griessl, and Kreutzer focused their contribution on the challenges AI poses for science communication.

The goal of the pitches was, on the one hand, to provide researchers with a platform and, on the other hand, to encourage active exchange that would offer the pitchers valuable impulses for their work while simultaneously informing participants about the status of their research. It was also noteworthy that one of the originally planned pitches was cancelled at short notice, but a group spontaneously gathered around the topic, engaging in an intensive discussion despite the lack of prepared input.

To conclude the first day of the conference, tech journalist Eva Wolfangel (MPI-IS) moderated a panel discussion titled "Is AI Inevitable?". In her introduction, she described AI as a "power (struggle) technology". The panellists Moritz Hardt (Director MPI-IS Tübingen), Annette Leßmöllmann (KIT), and Esther Greussing (TU Braunschweig) spoke about the transformative impacts of generative AI on communication, education, and fairness. During the discussion, Wolfangel raised the question of who actually has access to AI and who can control it – especially in the context of science communication and public perception. What does authority mean in such a society, and who will exercise it? This question led to further profound reflections: Greussing asked what expertise means today and how it might change when AI acts as an "expert". Leßmöllmann wanted to know how AI systems, large language models, or social media could support democracy. The discussion quickly made one thing clear: everyone must actively engage in the AI discourse and find their position in this field.

After this diverse and informative first day of the Persuasive Algorithms conference, participants headed to Neckarmüller, a well-known Tübingen restaurant serving Swabian specialities. This allowed everyone to recharge and start the next day refreshed.

Wednesday, 13th of November 2024

Impressions of the second day of the conference. Photo: @Silvester Keller

On Wednesday morning, two panels focused on the overarching themes "AI, Politics and Their Media" and "Towards AI Literacy". In the first panel, Melanie Leidecker-Sandmann and Tabea Lüders (both KIT) spoke on "More than Humanoid Robots and Cyborgs? How German Print Media Visualize Articles on Artificial Intelligence". The two researchers are also exploring this question in their current preprint, which they are developing together with three other scholars. Other presentations in this panel included "GenAI and the Disruption of Animation Production" by Erwin Feyersinger, an animation expert in media studies at the University of Tübingen, and "From Public Discourse to Private Echo? The Role of Selective Exposure in Political Interactions with ChatGPT" by Cornelia Sindermann (University of Stuttgart).

In a second panel, which took place simultaneously, Ole Engel and Elisabeth Mayweg (HU Berlin) discussed "Reasoning with Generative Artificial Intelligence". Jan Batzner from the Weizenbaum Institute gave a presentation on "GermanPartiesQA: Benchmarking Commercial Large Language Models for Political Bias and Sycophancy", and Charley Wu (University of Tübingen) spoke about "The Librarian of Babel" in the context of AI literacy.

After a break, the conference continued with problem pitches on the topics "AI & Public Discourse", "AI and Creativity" and "AI & Rhetoric". During the pitch "AI & Public Discourse", for example, participants debated various aspects of AI's role in discourse and public discussions in small groups. (Photo: @Silvester Keller)

In the afternoon, participants had the opportunity to gain new insights through further presentations in the panels "Inclusive Science Communication" and "Towards AI Literacy". The topics ranged from "AI Fora: Inclusive Science Communication" to "Generative AI as Pedagogical Machine".

Additionally, Zoltan Majdik (North Dakota State University) explored the topic "Bridging the gap: A Framework for Rhetorical Audits of Large Language Models" in depth, examining how rhetorical elements can be applied to AI systems. He focused particularly on rhetorical tropes, deliberative norms, and semantic complexity. Majdik demonstrated that generative AI often produces metaphors such as war imagery that shape the discourse around AI. He also highlighted the importance of discourse values like validity, which play a crucial role in the acceptance of AI-generated contributions. Majdik emphasised the need to create semantically rich content to make communication more persuasive and impactful. This, in turn, can help integrate AI systems more effectively into existing discourses.

Bernhard Schölkopf (MPI-IS/ELLIS Tübingen) delivered the final keynote of the day. His research draws on Jorge Luis Borges, linking the writer's perspectives to artificial intelligence in the modern era. Accordingly, his talk focused on the concept of cultural learning, which is central to human understanding and communication but has so far been largely overlooked by AI researchers. Schölkopf's approach advocates training models specifically for cultural learning. This is particularly important because humans often perceive the world through fiction and narratives, making cultural and fictional knowledge essential for human-machine communication. At present, however, AI remains at the level of pattern recognition and is still incapable of understanding causality.

Thursday, 14th of November 2024

Photo: @Silvester Keller

What is an AI-Ouroboros?

Another key topic in Mike Schäfer's talk was the concept of the AI-Ouroboros. This refers to the growing phenomenon of AI generators being trained on AI-generated content. The term draws on ancient mythology, where the Ouroboros, a serpent biting its own tail, symbolises a self-perpetuating cycle. Applied to AI, this means that the content produced by artificial intelligence serves as training material for further AI models. This creates a self-reinforcing loop in which AI systems rely on their own outputs to learn and generate new content, potentially reducing human input in the process.

The morning lectures on the final day of the event explored the intersection of literary studies, philosophy, and rhetoric with AI. The day began with a keynote by Mike Schäfer (University of Zürich) on science communication in the age of AI. His talk highlighted the profound and lasting impact of artificial intelligence on science communication – both as an opportunity and a challenge. While AI can help foster evidence-based discussions and improve access to information, it also poses risks, particularly in the spread of misinformation. The growing use of AI tools such as JECT.AI illustrates how workflows in journalism and science communication are evolving. These developments make it clear that a critical and responsible approach to AI is essential – not only to harness its potential effectively but also to recognise its limitations.

The final panel of the Persuasive Algorithms conference explored various subtopics under the theme "Rhetorical Concepts of Generative AI". Among the speakers, Fabian Erhardt (University of Tübingen) presented on "Algorithmic Persuasion as Metacognitive Persuasion". In his research, Erhardt examines how AI-driven persuasion strategies relate to metacognitive processes. His analysis focused on whether and to what extent algorithmic forms of communication specifically target metacognitive mechanisms – that is, the ways in which people reflect on their own thinking and beliefs.

After one final shared lunch, many positive remarks, and farewells in the crisp November air, the participants went their separate ways – leaving with a strong desire for a future edition of this multi-day event, which brought together researchers from various disciplines for meaningful exchange. (Photo: @Silvester Keller)

For those interested, the evening featured the Science Notes event at the cinema museum, hosted by Olaf Kramer. As part of the Science and Innovation Days at the University of Tübingen and accompanied by the Cognitive Science Center, the event focused on exploring and discussing strategies for making online debates fairer and more constructive, contributing to the strengthening of democratic culture. In short: how can we learn to "argue better"?

We would like to thank the organising team and everyone who contributed to making this outstanding event possible.

You can find the conference announcement and schedule for 2024 here.