With the "Deus Ex Machina? — Testing AI Tools" series, we present various tools that aim to simplify writing, design and research using artificial intelligence. More on the "Deus Ex Machina?" series can be found here.

Overview

Last March, the New York-based company Runway released the second generation of its online tool Runway Research. Like the text-to-image tools Midjourney and DALL‑E, Runway Research Generation 2 (Gen‑2) generates new images and videos from text prompts and image templates.

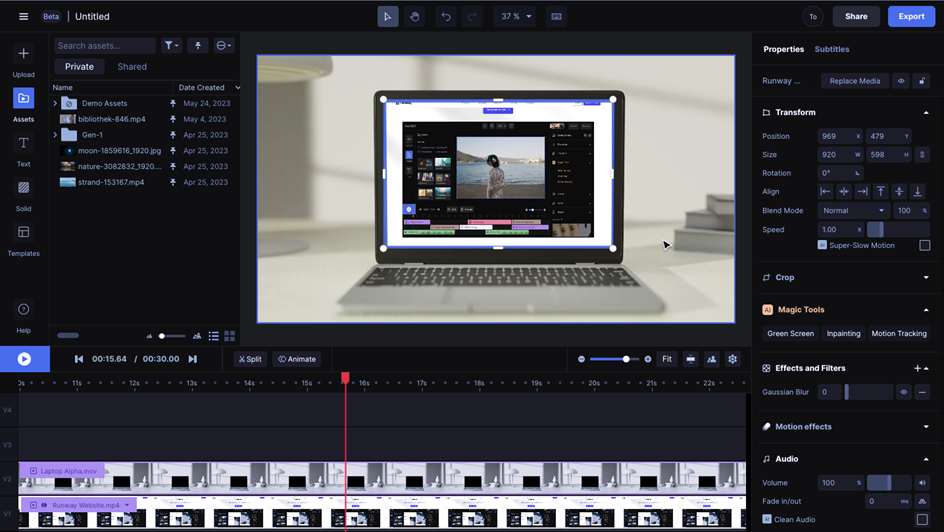

In addition to the browser-based video editor, which is strongly reminiscent of Adobe Premiere Pro or DaVinci Resolve (available in a free version), the tool offers ready-to-use templates for audiovisual clips and presentations. These can be personalized with stock material or your own recordings. The editor does not offer any functions for adjusting the brightness and color of videos; it is better suited to arranging and cutting finished clips and combining them with text and audio.

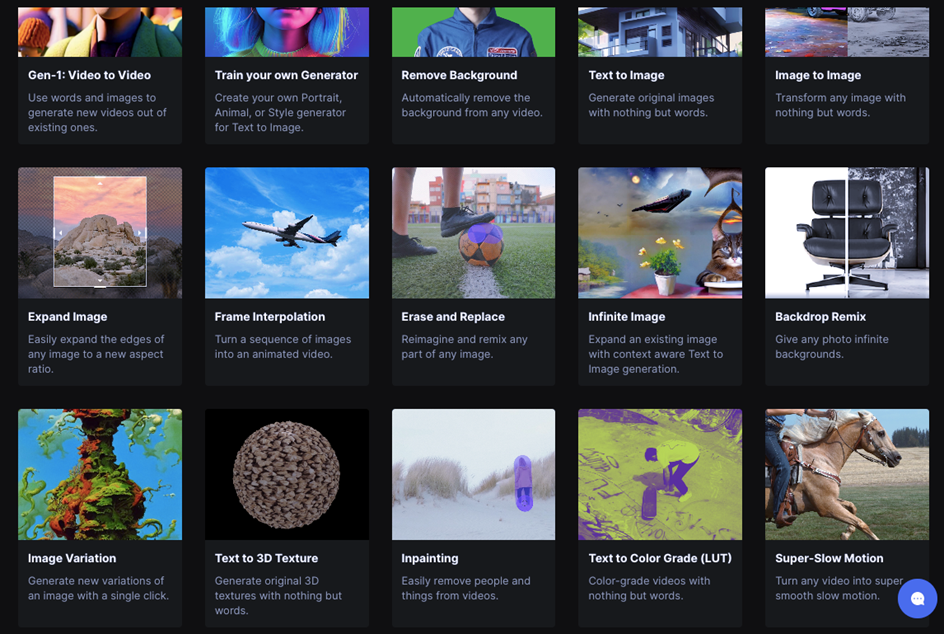

Further editing functions are provided by the various AI Magic Tools, which open in new tabs. They can automatically add subtitles to your own videos, optimize the sound or correct the color. In addition, objects and people can be removed from a video or separated from the background.

All the functions mentioned so far can be used after registering with an email address. With the free plan, the total number of seconds of video that can be edited is severely limited, export quality is restricted, and the number of generation attempts is capped. The tool can be upgraded with paid subscriptions, which cost either $12 or $28 a month and allow higher-quality results with no time limit on downloadable media.

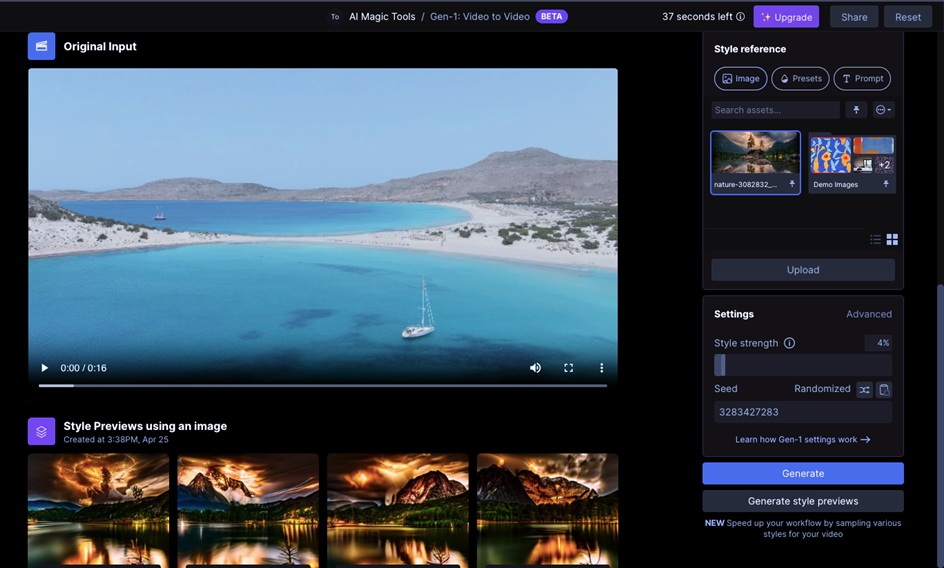

Also free to use, and in keeping with the first generation of the tool, is the function for generating videos with a trained AI model. Using an image or text as a prompt, it can compile new videos and create entirely new scenes, and it can incorporate visual effects into existing videos. Those who have seen this year's seven-time Oscar winner Everything Everywhere All at Once may remember the scene with the two stones, whose movements were animated using this tool (among others). In an interview published on Runway's website, Evan Halleck, one of the film's visual effects artists, talks about how the tool helps him produce music videos and commercials.

The AI behind the application

In addition to this interview, Runway has published further reports on filmmakers who use its tool and refers to various papers on the programming and development of the tool. These are freely accessible on arXiv, the preprint server operated by Cornell University. However, the papers on Runway Generation 1 offer little insight into how it works, especially for readers with little or no programming knowledge.

The paper on Generation 2 is due to be published later this year and is expected to describe the development and training of the tool. In the six months since the release, however, no such paper has appeared, and no information on the application and its training is available. It is also not yet known whether the videos and images uploaded by users are used to train Gen‑2 and may appear in other users' work (as of August 2023).

The rhetorical potential of the tool

More accessible, on the other hand, are the tutorials for the individual functions that can be found on YouTube. On a separate channel, several videos show how to use the tools and promote "next-generation content creation with artificial intelligence". This motto is often combined with the promise of supporting creative work and reducing the cost of producing visual media, since the tool can be used to create entire films without the often expensive recording of film material.

However, this requires some practice and patience, as results do not always look authentic and depend heavily on the source material and the prompt. Depictions of non-existent people in a video are less convincing, as the example video of the library corridor shows: once the person is added, the video loses its realism (see video 2). For abstract gimmicks, on the other hand, it is worth trying out different prompts and settings (see video 3).

Changing a video using a source image usually works quite reliably. Turning a blue sky into an impressive color spectacle, as in the following example, takes only a few clicks.

Finally, the tool can also imitate well-known "looks" through descriptive text input, which (should) trigger certain associations in the target audience. For example, entering "Wes Anderson Style" produces colorful suggestions that are clearly reminiscent of the director's detailed, architectural signature style.

Usage in science communication

The video editor is simple and intuitive to use. It allows basic editing of cuts, text and audio. The editing templates offer visually appealing designs that can easily be filled with your own texts and videos. They can be especially helpful for those inexperienced with editing, as they are easy to use and deliver reliable results. This is also where the tool's potential lies: it can support inexperienced users in producing audiovisual content. And even if Gen‑2 is primarily intended for filmmakers, it can also be used in science communication to visualize complex content, for example to create attractive presentations or to cut short clips.

However, further adapting applied templates or changing individual editing steps made by the AI can get tricky. The same applies to other functions in which the AI takes over entire editing steps after a single click. Whether removing noise from the sound or color-correcting videos, there is no log of the changes made by the AI, so there is no way to track the program's editing steps precisely. Moreover, generated changes cannot be weakened, strengthened or applied only partially. It is possible to reset the editing and generate it again with changed parameters, but this is time-consuming and costs one generation each time, and generations are limited in the free account anyway.

Our own experiment with the blue butterfly, processed with the image-to-image function, showed how many generations may be necessary to achieve the desired result. The combination of image and prompt was supposed to create a new image according to the specifications.

The attempt to place the butterfly on a horse via the prompt was not successful. Instead of a second animal, the AI generated a horse pasture and a stable in the image background. The recoloring of the butterfly, on the other hand, worked well, even though the prompt did not ask for it.

The "Infinite Image" function, which extends images with objects described in text, often delivers convincing results. Although there are still minor logic errors, such as incorrect shadows and partly surreal compositions, most of the results are almost credible, especially compared to the generated videos.

Still, it is quite easy to tell which parts belonged to the original picture, because the added objects show inconsistencies. To make additions such as the dog look more realistic, post-processing of light, shadow, color and highlights would be helpful, but the tool does not currently support this. Instead, several images can be generated per prompt, from which the best one can be selected.

Thanks to its many functions, the tool can be used in diverse ways to produce audiovisual content, with a particular focus on creating artificial photographs and videos. The image manipulation functions make it possible to illustrate ideas quickly, as realistic-looking results can be generated from text or image input. In science communication in particular, this opens up new possibilities for visualizing content. For example, climate researchers could use the tool to draw attention to the consequences of climate change by generating photorealistic images that depict the ecological effects of rising temperatures before they occur. Social media posts and presentations in particular can be enhanced with appealing visualizations.

Wrap-Up

Even if some results still leave room for improvement, the tool offers a quick and easy way to visualize design ideas in a new way. It can shorten production processes and allows editing and image manipulation without prior technical knowledge. Of particular interest for science communication could be the visualization of abstract or future scenarios, such as the impact of climate change on our ecosystem. However, not every project succeeds right away, so working with Gen‑2 by Runway Research requires a lot of trial and error; personalizing and adapting the results in particular takes patience and creativity in choosing the right prompts. The simple operation invites exactly that, and the generated results are fun, even if not every attempt yields a good result.